Dear Co-Investors,

2020 will be remembered as one of the most eventful years of our lives, both personally and for SaltLight.

As we grow our firm, the festive period has been a wonderful time to think back on our entrepreneurial journey but also to reflect on how we deploy capital over the coming decades. Our entrepreneurial journey is not dissimilar to others who take the trepidatious step with a blank canvas, a good dose of naivety about how difficult success will be, and a mission to create something that adds value to clients.

Our entrepreneurial roots mean that we naturally gravitate our investments towards founder-led businesses. Many would have started as we did: a spare room, a garage, a table in the kitchen (rumour has it that Jeff Bezos specifically rented a house with a garage so that he could say that he started in one)[1]. These founders have meticulously nurtured their commercial ‘baby’ from dream to public company in the global equity markets.

Many would likely acknowledge that they did not make the journey on their own. It depended on 1,000 steps forward and sometimes 500 backward: a customer who took a chance on them, a first employee who backed a crazy idea, a large supplier who gave them a break. Our journey at SaltLight is no different.

Events like COVID-19 in 2020 test how well a business has been structured. Resilient business models, broad customer diversification, stable cash flow generation and access to liquidity are all factors that truly matter in the ‘Valley of the Shadow of Death’. We’ve found that founder-led businesses often absorb shocks better. ‘Owners’ naturally gravitate to long-term thinking, with actions that seem to balance “surviving” with “thriving” in tough times.

Conversely, cracks commonly appear in a corporatised business where a professional CEO is a “contractual care-taker” motivated by flimsy, misaligned incentives that protect career risks over long-term value creation. Balance sheets are pushed to the red line. Customer relationships are transactional. And, if it all goes wrong, the CEO throws the keys on the desk and moves on to another job.

“Aligned incentives” has become quite a catch-phrase in annual reports over the last few years. In most corporate boardrooms, this generally entails shareholder-funded option schemes that result in a “heads I win, tails you lose” outcome for shareholders. At SaltLight, we categorically eat our firm’s own cooking. Most of our liquid capital is invested in the fund and therefore, over time, our capital will be rewarded (or suffer) on the same terms as yours. We, therefore, count every Rand invested with us as our own. From the bottom of our hearts, we thank you for entrusting your capital to us.

Portfolio Developments

We should stress that the SaltLight SNN Worldwide Flexible fund only commenced operations in mid-November 2020 and therefore any commentary on performance is superfluous. If you have not received your factsheet, please visit our website.

Who could have predicted that a virus would virtually halt the operations of a franchise quick-service restaurant (QSR) business like Famous Brands? Or that it would catapult the trajectory of Tencent and some of our semiconductor portfolio companies as digitisation demand was brought forward by several years?

In this letter, we will provide a few thoughts on Cartrack as it re-lists on the NASDAQ, our thinking about Artificial Intelligence, and how we have positioned for it.

Cartrack Holdings

Cartrack has been a significant contributor to performance across our funds.

In January, Cartrack announced that they were making a conditional offer to minorities (68% remains held by employees and the founder) to facilitate a re-listing on the NASDAQ at a cash offer price that was marginally below the pre-announcement trading price. Of interest to us, shareholders were offered a reinvestment option in the newly-listed NASDAQ security at the same offer price[2].

Cartrack has been publicly listed on the JSE since 2014 and has – shamefully – been caught up in the South African ‘sovereign discount’. As I highlighted in previous letters, it is a remarkably profitable, fast-growing, and capital-light software-as-a-service (SaaS) business BUT listed on the wrong stock exchange.

The re-listing makes rational sense for all shareholders; not only from a valuation-discount perspective but also for the reason that it will introduce an investor base that understands what it takes to build a hyper-scale SaaS business. By this I mean, low profitability now for greater shareholder value creation later.

Cartrack’s CEO, Zak Calisto, is just the type of founder that we love to partner with over the long term. To give an example: a journalist recently asked him whether he was going to take some money off the table on the listing and he replied:

“Despite the recent run on our share price, we still trade at a substantial discount to our peers. …The reason [why] I’ve never sold down [is that] what would I do with proceeds of the sale? Go and buy other shares that are correctly valued and sell mine that are undervalued. It doesn’t make sense”[3].

South-East Asia (SEA) is a considerably larger market opportunity than the South African market. The region has a population of ~600m, rapidly growing GDP per capita, and roughly five times South Africa’s annual new-vehicle sales.

Zak moved his family to Singapore a few years ago to build the SEA business, create an R&D centre, and tap the deep pool of engineers in the region. This is already bearing fruit, as last year they introduced telemetry hardware with a substantially cheaper manufacturing cost which has dropped customer acquisition costs meaningfully.

‘Scale Economics Shared’

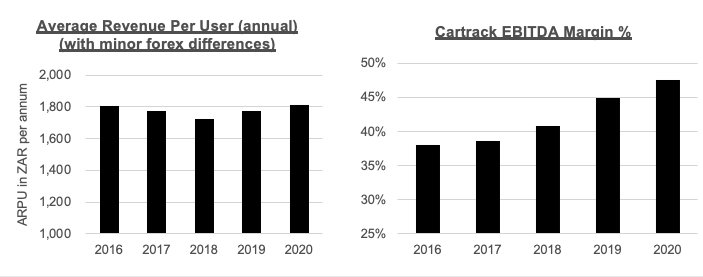

In our interactions with management over the years, we have consistently advocated that Cartrack should invest more heavily in scale growth, in the knowledge that its stellar historic profitability will be temporarily dampened. Don’t take this statement the wrong way: we’re delighted with profitable growth (21% CAGR over the last five years).

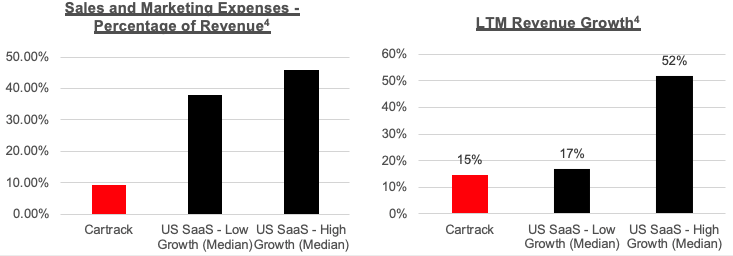

If we compare current sales and marketing spend to the US SaaS universe, Cartrack spends roughly a quarter as much to achieve similar ‘low’ growth rates. However, given that the APAC business is only a $20m business, it is not even making a dent in the total addressable market (TAM)[4].

Profitability or Scale?

A key point that we should make is that Zak has not raised his subscription prices since listing in 2014 yet EBITDA margins continue to expand. Each year he re-invests “scale benefits” by delivering more perceived value to subscribers at the same price point. The result is that customers stay well past their contractual 36-month period.

But does it necessarily make sense to be fabulously profitable at the cost of higher TAM penetration? Could APAC subscriber growth be 2-3x the current rate?

Cartrack’s subscriber acquisition costs have a quick payback period and any additional fixed costs of adding another subscriber are almost negligible. In this context, the calculus of generating shareholder value is relatively simple: increase market share (i.e., scale-up) and widen the gap between perceived customer surplus and the contribution margin per subscriber. The long-term result is a widening moat and durable returns on capital.

The perceived customer surplus metric is an important point of leverage (and certainly won’t be reflected in the financial accounting). This is where the customer feels that they’re getting more value than they are actually paying for. How does Cartrack pay for this customer surplus? Well, from the operating leverage that comes with scale and from keeping existing customers around longer than their contractual term.

Now, for a smaller competitor to compete, they only have a few choices on offer to earn comparable returns on capital and deliver the same perceived value:

- Charge a higher subscription price to earn the same incremental margin (and therefore the same return on capital) or;

- Charge the same price as Cartrack but accept a lower margin (and therefore earn lower returns on capital) or;

- ‘Buy scale’ by aggregating smaller competitors to operate on equal terms.
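For readers who like to see the arithmetic behind these three choices, here is a minimal sketch. Every number in it is hypothetical (the price, the costs and the subscriber counts are ours for illustration and are not Cartrack figures), and it assumes that each operator carries the same fixed cost base and that a merged operator carries only one.

```python
# Illustrative sketch of 'Scale Economics Shared'. All inputs are hypothetical.

def unit_economics(subscribers, price, variable_cost, fixed_costs):
    """Return (cost per subscriber, margin) for one operator per month."""
    cost_per_sub = variable_cost + fixed_costs / subscribers
    margin = (price - cost_per_sub) / price
    return cost_per_sub, margin

def price_for_margin(subscribers, target_margin, variable_cost, fixed_costs):
    """Price an operator must charge to earn target_margin at its scale."""
    cost_per_sub = variable_cost + fixed_costs / subscribers
    return cost_per_sub / (1 - target_margin)

PRICE = 100.0          # assumed monthly subscription price
VARIABLE = 30.0        # assumed variable cost per subscriber
FIXED = 2_000_000.0    # assumed monthly fixed costs (platform, R&D, overheads)

# The scaled incumbent versus a smaller competitor at the same price.
large_cost, large_margin = unit_economics(100_000, PRICE, VARIABLE, FIXED)
small_cost, small_margin = unit_economics(40_000, PRICE, VARIABLE, FIXED)

# Choice 1: the smaller competitor raises its price to match the incumbent's margin.
small_price = price_for_margin(40_000, large_margin, VARIABLE, FIXED)

# Choice 3: two small competitors merge and (by assumption) share one fixed cost base.
merged_cost, merged_margin = unit_economics(80_000, PRICE, VARIABLE, FIXED)

print(f"Incumbent margin at {PRICE:.0f}: {large_margin:.0%}")
print(f"Small competitor margin at {PRICE:.0f}: {small_margin:.0%}")
print(f"Price the small competitor needs for the same margin: {small_price:.0f}")
print(f"Merged competitor margin at {PRICE:.0f}: {merged_margin:.0%}")
```

With these assumed inputs, the incumbent earns a 50% margin where the smaller competitor earns 20% at the same price, would have to charge 160 to match the margin, and reaches 45% after merging. The gap closes only as scale converges.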

The challenge for Cartrack at this moment is that these advantages don’t exist in every market that they operate in and therefore we believe that management should aggressively invest to build up local distribution (particularly installers) and operational capacity.

The reality is that Cartrack is not a pure ‘digital’ SaaS business where customer acquisition is a matter of adding an app or signing up to a website. It has a physical component to distribution whereby an installer needs to physically wire the device into the subscriber’s vehicle. This is both a ‘feature’ and a ‘bug’: any competitor is required to build the same infrastructure, but it is also a frictional hurdle to geographic hyper-scaling. Unfortunately, until vehicle OEMs install their own tracking devices, the business can only scale linearly.

Network Effects

There is a more desirable realm that we often daydream about called ‘SaaS Nirvana’. SaaS Nirvana truly ignites non-linear hypergrowth and establishes an undoubted moat. This is when a SaaS business is able to introduce ‘network effects’ onto its platform. In the SaaS world, this is a condition where each new subscriber adds more value to another subscriber just by joining or using certain parts of the platform. Our ruminations lead us to a scenario where Cartrack offers a marketplace (app or data) on top of their installed base of tracking devices or their software acts as an interface between other modular vehicle IoT devices[5].

This outcome is wishful thinking right now until ‘scale’ becomes globally established. However, we should note that Cartrack is experimenting along these lines by repurposing its internal CRM software to be externally customer-facing. It’s also introducing a vehicle marketplace (given 1.2m vehicles on its platform) and a communicator between fleet managers and drivers. These adjacencies may have considerable ‘optionality’ for shareholders down the line. Meanwhile, we hope that management puts their foot down on scale initiatives.

Cloud, AI, and Selling Shovels to Gold Prospectors

It is worth discussing our thoughts on some of our offshore portfolio investments.

Our research indicates that we’re in the early innings of a technology structure that will form the bedrock of mass-adoption AI applications.

As a side note, your analyst has been tinkering with machine learning algorithms for over a decade (before Jim Simons calls us up, we must caution readers that our skills are considered "hobbyist" at best).

The true ‘explosion’ in AI adoption genuinely began in 2015 as compute capability radically improved and ‘deep learning’ algorithms passed from academia into the real world[6]. AI is an incredibly interesting yet technical domain that we think has passed the ‘hype’ stage to be an obligatory component of “future-proofing” business operations.

We’re old enough to reminisce about the early stages of the Internet, when companies appointed an eager “e-something” executive who would tinker in an R&D division whilst the ‘real’ executives carried on with their merry money-making in the bricks-and-mortar divisions. It was quite clear a decade later that the companies that made significant investments and shifted their models had adapted to the forces of digitisation, whereas those that were complacent (or too late to realise the shift) were disrupted and faced existential crises.

We believe that Artificial Intelligence (AI) is broadly in a similar moment today. The one meaningful difference between AI and the Internet era is that the level of domain knowledge needed to be competent is considerably higher (hence we remain AI Luddites). Today, a company would need to hire a PhD-level maths graduate rather than the curious self-taught teenager of the Internet age.

How to See the AI Opportunity

We tend to approach industry analysis by using first-principles techniques. In previous letters, we’ve written about systems theory that we have found helpful in unleashing understanding. In this case, conceptualising the building blocks of hardware and software helps assimilate the broad predictions that we need to make on AI opportunities.

If you tend to refer technical discussions to your teenage son, then you may want to skip ahead to the section labelled “Dummies Guide to AI”. For those who love the detail, here we go:

Generally, compute and network systems obey a centralised vs. decentralised regime. The primary reason for this is that one technological component of the system usually remains a bottleneck. Frequently over the decades, ‘connectivity’ (speeds or cost) is the culprit, and this forces what would have been integrated computer processing to a distributed structure between physical devices. To use a simplistic, modern-day example, an email application runs on your laptop but emails are stored on a server somewhere. Technically speaking, processing happens either at the server or what is called an ‘edge’ device that could be a mobile phone, tablet, PC, fridge, drone, or someday, a household robot.

In each regime du jour, businesses aggressively spent IT budgets to remain competitive in their respective industries. As projects were completed (usually behind schedule and over budget), innovations in newer components of the compute infrastructure resulted in performance enhancements. Naturally, one of the older components then became a bottleneck that the system needed to adapt to. For example, CPU processing speeds would improve, but data storage then became the limitation.

| Decade | Example | Regime |
|---|---|---|
| 1970s | Mainframes the size of rooms with ‘dumb terminals’ | Centralised |
| 1980s | Personal computers with centralised network servers that handled storage | Decentralised |
| 1990s | Desktop PC/Internet era | Decentralised |
| 2000s | PC/Mobile/Internet era | Decentralised |
| 2010s | Cloud / on-premise servers | Centralised |
| 2020s | Cloud and edge device AI era | Hybrid |

As an aside, at SaltLight we run all our IT on the cloud through AWS. We run data collection activities overnight as well as portfolio management systems 24/7. Our infrastructure costs continue to drop as AWS introduces new products that convert fixed costs to variable ones (oh, another application of Scale Economics Shared!). At first, we started with a fixed-cost ‘provisioned’ server running 24/7. As AWS increased functionality, we could then spin up a server for 5 minutes whilst the collection tasks were carried out and then shut it down when the tasks were completed. We've recently moved many of our tasks to 'serverless' applications which means our various functions run on demand and we are now charged on a per-second basis.
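The shift from fixed to variable cost described above can be sketched in a few lines. The rates below are hypothetical placeholders chosen for easy arithmetic, not AWS prices, and the run counts and durations are assumptions rather than a description of our actual workloads.

```python
# Illustrative comparison of three ways to pay for the same nightly task.
# All rates and durations are hypothetical, not actual AWS pricing.

HOURS_PER_MONTH = 730  # average number of hours in a month

def provisioned_cost(hourly_rate):
    """Server left running 24/7: a fixed cost regardless of usage."""
    return hourly_rate * HOURS_PER_MONTH

def on_demand_cost(hourly_rate, minutes_per_run, runs_per_month):
    """Server spun up only for the duration of each run."""
    return hourly_rate * (minutes_per_run / 60) * runs_per_month

def per_second_cost(rate_per_second, seconds_per_run, runs_per_month):
    """'Serverless' function billed only for the seconds it executes."""
    return rate_per_second * seconds_per_run * runs_per_month

HOURLY_RATE = 0.10        # assumed cost per server-hour
RATE_PER_SECOND = 0.00002 # assumed cost per second of function execution

fixed = provisioned_cost(HOURLY_RATE)
spun_up = on_demand_cost(HOURLY_RATE, minutes_per_run=5, runs_per_month=30)
serverless = per_second_cost(RATE_PER_SECOND, seconds_per_run=90, runs_per_month=30)

print(f"Provisioned 24/7:       {fixed:.3f}")
print(f"Five minutes per night: {spun_up:.3f}")
print(f"Per-second billing:     {serverless:.3f}")
```

Under these assumptions the monthly bill falls from 73.00 to 0.25 to roughly 0.05. The precise figures matter less than the shape: cost now tracks usage rather than capacity.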

In 2006, Amazon launched Amazon Web Services (AWS), its public cloud Infrastructure as a Service (IaaS) offering. AWS, coupled with low-performance smartphones, brought the centralised regime back into vogue. Crucially, adoption among start-ups accelerated because the economics of the cloud converted what was previously a capital-intensive line item for any new business into a variable pay-as-you-go model. A start-up could easily buy more capacity as it grew.

It’s been 14 years since AWS started and cloud adoption has been expanding rapidly. IDC forecasts that cloud spend will surpass $1trn by 2024 and still grow at a 25% CAGR[7].

Of course, we’re no longer just using PCs but all sorts of devices to connect to the cloud. Edge devices (laptops/smartphones/tablets) and Internet of Things (IoT) devices (fridges, drones, Amazon Alexa) are all now dependent on bi-directional communication with the cloud.

Remember that I said there is usually a bottleneck somewhere. The issue has become connectivity again: connectivity between cloud and edge devices, or connectivity between data centres. The volume of data is simply becoming too much for mobile or fibre connectivity. 5G promises to alleviate some of the bandwidth bottlenecks; however, it is nowhere near the level needed for higher-level AI applications. Therefore, the system has to devolve again and distribute processing between devices.

Dummies Guide to AI

Back to AI. As with everything in technology, the promise and the subsequent reality follow what Gartner describes as a ‘hype cycle’. In AI’s case, as the bottlenecks described above have been tackled, we believe that the ‘hype gap’ has been steadily narrowing.

Without going into too many technical details, AI will certainly require a hybrid system where ‘training’ will occur at the central server (cloud) and ‘inference’ will happen either on the cloud or an edge device.

Our research approach has been to seek out where the bottlenecks are, who solves them, how many businesses can currently solve them and how long could a competitive advantage, if any, last.

This next discussion is rather technical and to avoid readers being lost in jargon, it is helpful to use an example:

Cat vs. Dog?

One of the early AI applications developed was image recognition. A deep learning model could process a picture of an animal and tell you whether it is an image of a cat or a dog. The image recognition model would have been ‘trained’ using thousands of pictures of cats and dogs such that the algorithm ‘learns’ to recognise the features of a cat/dog. This is called the training part of the process[8].

Once the model and its parameters (simplistically: cat vs. dog colours, dimensions, etc.) had been defined, the developer could deploy the model to a mobile phone app that used the phone’s camera. The mobile app would need to do some minor image processing on what was captured; however, it would not need to reprocess the thousands of dog/cat pictures to conclude that the image was of a cat (this activity is called ‘inference’).
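To make the split between ‘training’ and ‘inference’ concrete, here is a toy sketch. It is emphatically not deep learning: we have swapped in a simple nearest-centroid classifier, and the two ‘features’ per animal are synthetic numbers that we invented as a stand-in for real photographs. The point is only that training touches every labelled example, whereas inference uses nothing but the handful of stored parameters.

```python
import random

def make_examples(n, centre, label, rng):
    """Generate n noisy two-feature examples around a class centre (synthetic data)."""
    return [((rng.gauss(centre[0], 0.1), rng.gauss(centre[1], 0.1)), label)
            for _ in range(n)]

def train(examples):
    """'Training': pass over every labelled example to learn one centroid per class."""
    sums, counts = {}, {}
    for (x, y), label in examples:
        sx, sy = sums.get(label, (0.0, 0.0))
        sums[label] = (sx + x, sy + y)
        counts[label] = counts.get(label, 0) + 1
    return {label: (sx / counts[label], sy / counts[label])
            for label, (sx, sy) in sums.items()}

def infer(model, features):
    """'Inference': compare one new example against the stored centroids only."""
    x, y = features
    return min(model,
               key=lambda label: (x - model[label][0]) ** 2 + (y - model[label][1]) ** 2)

rng = random.Random(42)  # fixed seed so the sketch is repeatable

# Hypothetical feature centres: cats cluster near (0.8, 0.2), dogs near (0.3, 0.7).
data = (make_examples(5000, (0.8, 0.2), "cat", rng)
        + make_examples(5000, (0.3, 0.7), "dog", rng))

model = train(data)                   # heavy step: reads all 10,000 examples
print(infer(model, (0.75, 0.25)))     # light step: uses four stored numbers
print(infer(model, (0.25, 0.80)))
```

The trained ‘model’ here is just four numbers (two centroids of two features each), which is why it could be shipped to a phone without the 10,000 training examples. Real image models have millions or billions of parameters, but the division of labour is the same.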

As mentioned, our thinking has been around the inputs, outputs, constraints and bottlenecks. We have simplistically determined a few key learnings:

- AI models are exceptionally data hungry (as I will illustrate below). Model performance depends on (1) the number of parameters, (2) the size of the dataset and (3) the amount of compute used to train the model.

- If inference can’t be performed on an edge device, the input data has to be transferred to the cloud for processing. If a timeous response is needed, as with a voice-controlled device, data transfers become a fundamental bottleneck in the system. Think about when you give instructions to Siri: there is a noticeable delay before Siri responds (yes, it appears that we have personified AI models already).
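The connectivity bottleneck in the second point can be illustrated with a rough latency budget. The payload size, bandwidth, round-trip time and compute times below are all hypothetical figures chosen for illustration; they are not measurements of Siri or of any real device.

```python
# Rough latency budget: cloud inference versus on-device (edge) inference.
# Every input is a hypothetical illustration, not a measurement.

def cloud_latency_ms(payload_kb, uplink_mbps, round_trip_ms, cloud_compute_ms):
    """Time to upload the input, reach the server and run inference there."""
    kilobits = payload_kb * 8
    transfer_ms = kilobits / (uplink_mbps * 1000) * 1000  # kbit / (kbit/s) -> s -> ms
    return transfer_ms + round_trip_ms + cloud_compute_ms

def edge_latency_ms(edge_compute_ms):
    """On-device inference: no transfer, but a slower chip."""
    return edge_compute_ms

cloud = cloud_latency_ms(payload_kb=200, uplink_mbps=5,
                         round_trip_ms=80, cloud_compute_ms=20)
edge = edge_latency_ms(edge_compute_ms=150)

print(f"Cloud inference: ~{cloud:.0f} ms")
print(f"Edge inference:  ~{edge:.0f} ms")
```

With these assumed inputs, the cloud route takes roughly 420 ms, of which about 320 ms is spent simply moving data, against 150 ms on the device even though the device’s chip is assumed to be several times slower.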

Artificial Human-like Intelligence or Digital Tool?

‘Deep Learning’ AI models are based on a process similar to the one the brain follows. A data scientist feeds the model petabytes of data and it starts to identify patterns without human intervention. In 2012, the use of a deep learning model to perform static image recognition was a technological leap.

Since then, AI models and their day-to-day applications have rapidly evolved: we have audio-recognition AI (Alexa and Siri) and we’re fast approaching natural language processing that produces human-like text. GPT-3 is able to write its own poetry (and perhaps, one day, future versions will write our investor letters)!

GPT-3 is currently the most advanced natural language processing model and has approximately 175bn parameters (there are rumours that Google has a 1,600bn parameter model). On an apples-to-apples basis, the human brain is believed to have 100,000bn parameters (roughly 571x GPT-3).

Many readers will breathe a sigh of relief that computers are not quite ready to approach the feared ‘singularity’ event. However, we should point out that the previous GPT-2 model launched only a year earlier with 1.5bn parameters (roughly 117x smaller than GPT-3). At the rate at which data and compute capacity are increasing, AI models could catch up with human-level parameter counts within a decade.
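The ratios quoted above, and the ‘within a decade’ claim, can be checked with a few lines of arithmetic. The parameter counts are those cited in this letter; the annual growth multiples are assumptions for illustration, and parameter count is of course only a crude proxy for capability.

```python
import math

# Parameter counts as cited in this letter.
GPT2_PARAMS = 1.5e9
GPT3_PARAMS = 175e9
BRAIN_PARAMS = 100_000e9  # the 'believed' human-brain figure quoted above

gpt3_vs_gpt2 = GPT3_PARAMS / GPT2_PARAMS    # about 116.7
brain_vs_gpt3 = BRAIN_PARAMS / GPT3_PARAMS  # about 571.4

def years_to_close_gap(gap, annual_growth):
    """Years needed to grow by a factor of `gap` at a constant annual multiple."""
    return math.log(gap) / math.log(annual_growth)

print(f"GPT-3 vs GPT-2: {gpt3_vs_gpt2:.1f}x")
print(f"Brain vs GPT-3: {brain_vs_gpt3:.1f}x")

# Assumed growth rates: the GPT-2 to GPT-3 pace, 10x a year, and a mere doubling.
for growth in (gpt3_vs_gpt2, 10, 2):
    years = years_to_close_gap(brain_vs_gpt3, growth)
    print(f"At {growth:.0f}x per year: {years:.1f} years")
```

Even if parameter counts merely doubled each year, far slower than the jump from GPT-2 to GPT-3, the 571x gap would close in a little over nine years.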

If you’re still awake, this background raises a relevant question for our purposes:

How do we invest in an unknown future?

AI all sounds extremely promising, but can we make rational capital allocation bets that create value for our clients? Our research has focused on mapping out the inputs and outputs, and on learning where it is difficult and where it is easy to compete in AI. History has taught us that picking technological ‘winners’ is an extremely difficult endeavour (who would have predicted in the late 1990s that Google would eclipse Yahoo?).

We’ve found that a more profitable approach in a nascent technological phase is to follow the wise adage of “selling shovels to hopeful prospectors in a gold rush”. Our current portfolio investments have focused on the companies selling the inputs to these “AI prospectors” in search of AI leadership. At this stage, we’ll refrain from publicly disclosing the specific companies that we have invested in to avoid commitment bias and protect some of our IP.

However, we can make hypotheses about some broad predictions:

- Unstructured data produced will explode in parallel with the growth of edge devices (IoT and automotive applications, in particular).

- AI models need vast amounts of storage, compute and memory to improve model performance.

- There will likely be mega-AI monopolies.

The last point is an important one. There is one element of industry structure that we think will help contextualise industry returns on capital. If our systematic analysis and our hypotheses are correct, it leads us to believe that there will be an extreme concentration in the hands of a few mega-AI tech monopolies (some already here and perhaps some to come).

- The company with the most data will likely offer the best AI applications.

- The company with the most accurate models will be able to charge the most.

- The company with the deepest hardware and software integration that results in differentiated performance will attract the deepest AI problems to solve.

This creates a feedback loop of better model training, better inference performance and better outcomes that attracts more data and so the loop starts again.

Our portfolio construction will be continuously adjusted by weighing the predictions that we need to make to justify a valuation against the competitive position that a particular supplier has in the value chain. We have no idea about the timing of when these portfolio investments will bear fruit, but we are fairly confident that, over a decade, we should do relatively well if our thesis is correct.

******

Housekeeping

The SaltLight SNN Worldwide Flexible Fund (“SLTWWF”) formally launched on 11 November. Thereafter, there was a process to transfer existing client accounts into the fund and finalise some trading accounts. All told, we were only fully operational in early December. Therefore, in respect to the SLTWWF this quarter has been an ‘implementation’ quarter rather than a fully invested capital allocation.

If you would like to receive our investor letters in future, please feel free to join our distribution list here.

Once again, we look forward to the opportunity to be a steward of your capital. As always, please feel free to get in touch to correct any technical inconsistencies in our analysis or if you have any other questions.

Warmly,

David Eborall

Portfolio Manager

Disclaimer

Collective investment schemes are generally medium to long-term investments. The value of participatory interest (units) or the investment may go down as well as up. Past performance is not necessarily a guide to future performance. Collective investment schemes are traded at ruling prices and can engage in borrowing and scrip lending. A Schedule of fees and charges and maximum commissions, as well as a detailed description of how performance fees are calculated and applied, is available on request from Sanne Management Company (RF) (Pty) Ltd (“Manager”). The Manager does not provide any guarantee in respect to the capital or the return of the portfolio. The Manager may close the portfolio to new investors in order to manage it efficiently according to its mandate. The Manager ensures fair treatment of investors by not offering preferential fee or liquidity terms to any investor within the same strategy. The Manager is registered and approved by the Financial Sector Conduct Authority under CISCA. The Manager retains full legal responsibility for the portfolio. FirstRand Bank Limited, is the appointed trustee. SaltLight Capital Management (Pty) Ltd, FSP No. 48286, is authorized under the Financial Advisory and Intermediary Services Act 37 of 2002 to render investment management services.

[1] Dot.Con: How America Lost Its Mind and Money in the Internet Era, Cassidy, John (p. 124).

[2] At this point, the difference between the current market price and the cash offer price of R42 per share represents a ‘call option’ on a NASDAQ SaaS valuation on re-listing.

[3] Business Day, 14 January 2021, https://www.businesslive.co.za/fm/money-and-investing/2021-01-14-cartrack-bright-lights-big-city/

[4] Source: Company Reports, Jamin Ball, Clouded Judgement 1.22.21

[5] Potentially this could be the Human Machine Interface (HMI) of IoT devices in the vehicle.

[6] Deep learning is a form of AI in which the computer identifies patterns directly from data. Supervised learning is where the algorithm relies on human-prepared data in the training process and then applies what it has learned to an inference problem.

[7] Source: IDC, https://www.idc.com/getdoc.jsp?containerId=prUS46934120

[8] Adventurous readers can download a Dogs vs. Cats Training Dataset from Kaggle for free here. For the even more adventurous, an audio recognition dataset is available to recognize whether it is a cat or dog making a sound (link).